Choosing the server meant changing some things around within our video content analysis solution to make sure that they work together as well as possible.

So, here’s what we had to change:

- Here, we re-thought the way we scale the workloads. Previously, we had a certain number of input segments and a configured number of services that would process those.Now, the system creates the services to pre-process the input segments, and then they talk to Triton and save the received features. Then the system scales Triton and services. For each batch of 4 services, there’s 1 Triton instance that ensures the most effective load and performance.

- Frankly speaking, we didn’t need to change much in our codebase. We only made the connector and optimized docker images, dumping all the libraries that we no longer needed.

- Now our models call the connector that gets the predictions from the Triton servers instead of calling the library. Also, there are compatibility concerns: if Triton can’t work with the format of a certain model, we convert it to the supported one.

Next, we had to make the whole thing run efficiently. In order to optimize the performance, we migrated our backend to TensorRT. That helped us further tweak the model so that it would run quicker.

On top of that, the models gave us some trouble as well.

Since Triton does not support every model format under the sun, we had to convert the incompatible ones to the right format.

And you can’t just take a pile of models and magically change their format. Unfortunately, there are a ton of things that could go wrong during the process, so we had to oversee it for quality control.

Here’s how we did it.

We have built an evaluation criteria that decided whether there-formatting was successful. Otherwise, our developers had to come up with specific model configurations.

Table of Contents

The perks we got with Triton

Faster model initialization

Say goodbye to sequences of services — now we don’t need to start a service for each segment separately. We just start the Triton server, it initializes all the models and runs in the background.

The services responsible for pre-processing can call the server and get responses immediately.

It makes sense to keep it running in the background: it takes an insanely long time to start. So we implemented the server that initiates models once and then is available at all times.

Balanced GPU usage

With the help of Triton, we can now run inference and pre-processing as two separate processes.

Here’s why that is a good idea.

You see, the CPU runs the pre-processing, and GPUs have to sit there and wait until that process is done. So, we get low GPU utilization: expensive hardware is not used to its fullest capacity.

So we got a system that continuously works on video content analysis.

That allows it to process larger volumes of data more efficiently.

And we are all for it.

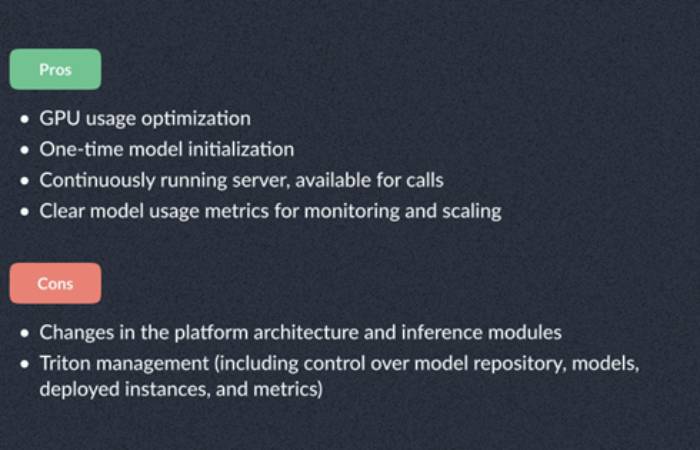

The drawbacks of Triton implementation

As with all things in life, you always trade something good for something bad.

Let’s see what disadvantages Triton server brings.

Well, we have already mentioned the first and the very important one: you’ll probably have to make changes to the architecture and inference models to accommodate Triton deployment.

Another thing you will have to do is establish additional control over model repository, model, deployed instances, and metrics.

In addition, you may get a traffic increase between modules and Triton server, so be ready for that.

And last, the model compatibility. Those not supported by Triton require re-formatting.

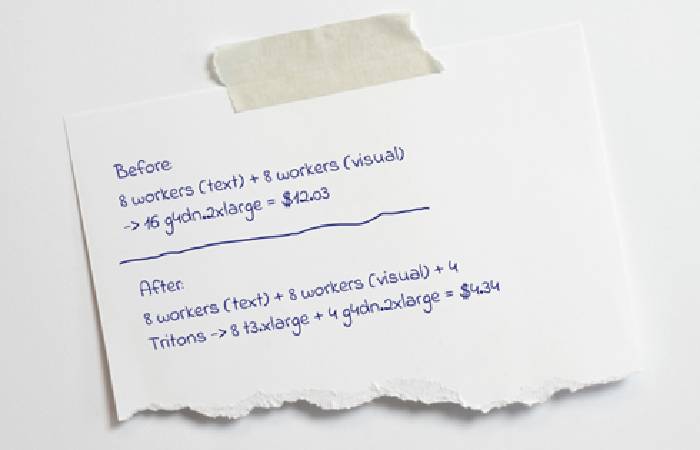

Let the math speak for us

Since we just began using the server, we decided to integrate it into a single end credits detection pipeline to see how this works out.

Even though it is still early in our testing, we are impressed with the results.

The time it takes to process clips hasn’t really changed.

But we did see an increase in the system’s output and scaling potential.

Thanks to the workflow implemented by Triton, we can use fewer GPUs and take more CPU for the same workload.

And that saves us money.

See for yourself: before using 8 workers with a text recognition model and 8 workers with a visual recognition model required 16 g4dn.2xlarge. That cost us $12.03.

Now we can get the same number of workers with two models and 4 Triton instances. For that we need 8 t3.xlarge and 4 g4dn.2xlarge. The cost of that is $4.34.

That’s almost three times less.

So that is settled — we will continue to implement the Triton server across the rest of our pipelines.

Author’s bio:

Pavel Saskovec, Technical Writer at AIHunters, covering tech-related topics, the majority of which center around technology helping optimize the workflow of the media industry.