Definition of Jitter

Jitter is the deviation from true periodicity of a presumably periodic signal. The term is often used in electronics and telecommunications, usually in relation to a reference clock signal.

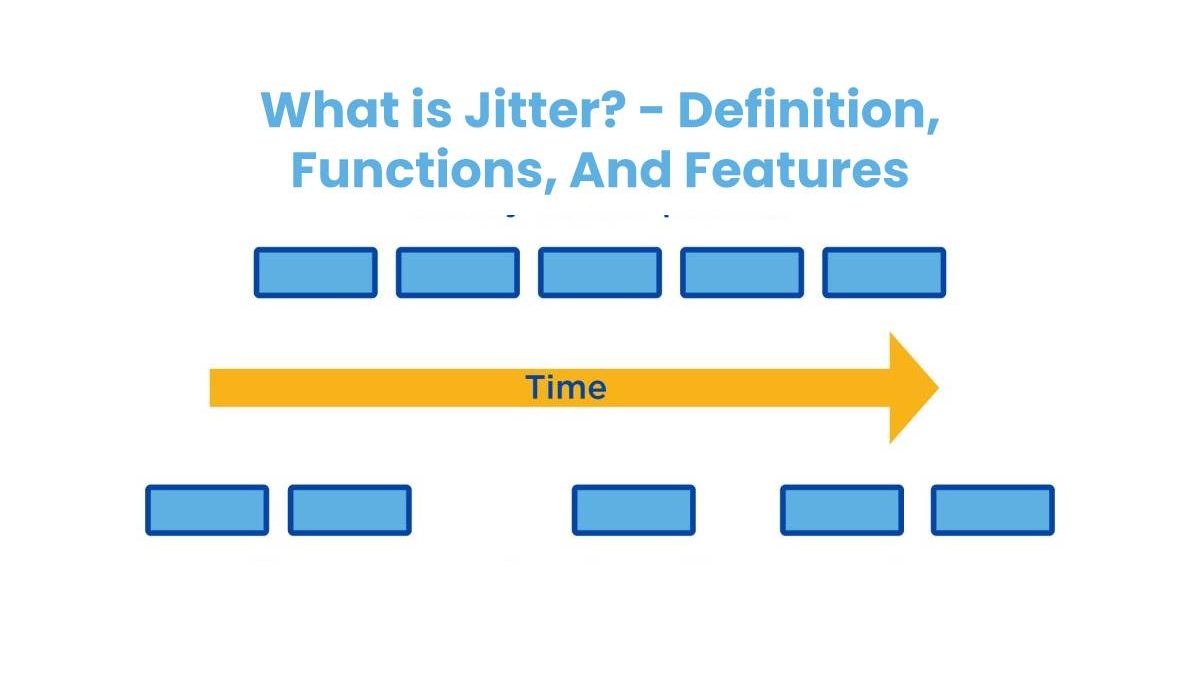

In networking, jitter is also known as packet delay variation. It refers to the variation, measured in milliseconds (ms), in the delay between data packets crossing a network, typically a disruption of the normal sequence in which packets are sent. In other words, the delay fluctuates as packets travel across the network, and these fluctuations could lead to a 50-millisecond delay in packet transfers. Jitter is closely tied to network congestion, which arises when devices compete for the same bandwidth. The more congested the network, the higher the chance of packet loss.

Functionality of Jitter

When a user visits a website, data packets are sent from a server over a network to the user’s computer or device and loaded by the web browser. When jitter is high, suppose that three packets are not delivered when requested. Once the delay ends, all three packets arrive at once. This overloads the requesting device, leading to congestion and the loss of data packets on the network.

Jitter is like a traffic jam in which data cannot move at a reasonable speed: all the packets reach a junction at the same time, and nothing can get through. The receiving device is then unable to process the information, so some of it goes missing. When packets do not arrive consistently, the receiving endpoint has to compensate and try to correct the damage.

Features of Jitter

In some cases, exact correction is not possible, and the losses become unrecoverable. When a network is congested, it cannot forward traffic as fast as it receives it, so the packet buffer fills up and packets start to be dropped. Although jitter is considered an obstacle that causes delay, breakdown, or even loss of communication over the network, some abnormal fluctuations do not, in reality, have a long-lasting effect. In these situations jitter is not much of a problem, because certain levels of jitter can be tolerated, such as the following:

- Jitter below 30 ms

- Packet loss of less than 1% of data transfer

- Total network latency less than 150 ms

The figures above describe the conditions under which jitter is acceptable. Acceptable jitter simply means the level of erratic fluctuation in data transfer that we are willing to tolerate.
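As a rough illustration, the three thresholds listed above can be combined into a single check. This is only a sketch: the `LinkStats` class, the constant names, and the `is_acceptable` function are hypothetical names chosen for this example, and the measurements are assumed to have been collected elsewhere.

```python
from dataclasses import dataclass

# Limits taken from the list above; the constant names are hypothetical.
MAX_JITTER_MS = 30.0
MAX_PACKET_LOSS_PCT = 1.0
MAX_LATENCY_MS = 150.0


@dataclass
class LinkStats:
    """Measurements for one connection (hypothetical container)."""
    jitter_ms: float
    packet_loss_pct: float
    latency_ms: float


def is_acceptable(stats: LinkStats) -> bool:
    """Return True only if all three values are below their limits."""
    return (
        stats.jitter_ms < MAX_JITTER_MS
        and stats.packet_loss_pct < MAX_PACKET_LOSS_PCT
        and stats.latency_ms < MAX_LATENCY_MS
    )
```

For example, a link with 12 ms of jitter, 0.2% packet loss, and 80 ms of latency passes the check, while the same link with 35 ms of jitter does not.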

For best performance, jitter should remain below 20 milliseconds. If it exceeds 30 milliseconds, it will have a noticeable impact on the quality of any real-time conversation a user may have. At that level, the user will begin to experience distortions that affect the conversation and make messages difficult for other users to understand. The effect of jitter depends on the service being used. In some services the fluctuations are barely noticeable, but they remain significant in others, such as voice calls and video calls. Voice calls are the most frequently cited service in which jitter is genuinely disruptive.

This is due to the way VoIP data transfer works. The user’s voice is divided into packets, which are transmitted to the person on the other end of the call. To measure jitter, the average delay between packets needs to be calculated, and this is done in several ways, depending on the type of traffic.

Voice traffic

How jitter is measured depends on whether the user has control over a single endpoint or both.

Single Endpoint

Jitter is measured by determining the average round-trip time (RTT) and the minimum round-trip time for a series of voice packets.
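A minimal sketch of the single-endpoint measurement follows. The text above names the two quantities but not how they are combined. Taking the difference between the average and the minimum RTT is an assumption made here for illustration, and `single_endpoint_jitter` is a hypothetical function name.

```python
from statistics import mean


def single_endpoint_jitter(rtts_ms):
    """Estimate jitter from round-trip times measured at one endpoint.

    Assumption: jitter is taken as average RTT minus minimum RTT.
    """
    if not rtts_ms:
        raise ValueError("at least one RTT sample is required")
    return mean(rtts_ms) - min(rtts_ms)


# Round-trip times in milliseconds for four voice packets (made-up values).
samples = [40.0, 42.0, 50.0, 44.0]
print(single_endpoint_jitter(samples))  # average 44.0 - minimum 40.0 = 4.0
```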

Double Endpoint

Jitter is measured using the instantaneous jitter, that is, the variation between the transmit and receive intervals of a packet.
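The double-endpoint calculation can be sketched as below, assuming that send and receive timestamps are available for every packet. The function name `instantaneous_jitter` and the timestamp values are hypothetical. For each pair of consecutive packets, the code compares the interval between their send times with the interval between their receive times.

```python
def instantaneous_jitter(send_ms, recv_ms):
    """Return the instantaneous jitter for each consecutive packet pair.

    send_ms and recv_ms hold the send and receive timestamps (in ms)
    of the same packets, in the same order.
    """
    if len(send_ms) != len(recv_ms):
        raise ValueError("send and receive lists must have the same length")
    jitters = []
    for i in range(1, len(send_ms)):
        send_interval = send_ms[i] - send_ms[i - 1]
        recv_interval = recv_ms[i] - recv_ms[i - 1]
        # Jitter is how much the receive spacing differs from the send spacing.
        jitters.append(abs(recv_interval - send_interval))
    return jitters


# Packets sent every 20 ms but received at uneven intervals (made-up values).
sent = [0, 20, 40, 60]
received = [50, 72, 90, 115]
print(instantaneous_jitter(sent, received))  # [2, 2, 5]
```

Averaging the returned values gives a single figure for the call. In this example that is 3 ms.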

Bandwidth test

Instead of doing the math, a user can determine the level of fluctuation they are dealing with by running a bandwidth test. This is the easiest way to test for jitter.